SpAtten: Efficient Sparse Attention Architecture with Cascade Token and Head Pruning

In revisiting the foundational concepts of Transformers and attention mechanisms, which we’ve discussed in previous articles, the introduction to the paper on SpAtten sheds new light on these critical elements in the landscape of natural language processing (NLP). This section provides a deeper understanding of how SpAtten innovatively addresses the inefficiencies inherent in traditional attention mechanisms, despite their transformative impact on NLP models.

Introduction

As we’ve discussed before, attention mechanisms have been a game-changer in NLP, enabling transformer-based models like BERT, GPT etc. to achieve unprecedented levels of language understanding and generation. These mechanisms excel by dynamically focusing on different parts of input data, mimicking the human ability to concentrate on relevant information while reading or listening.

However, as noted in the SpAtten paper, this capability does not come without its costs. The computational intensity required by attention mechanisms to process each part of the input in detail translates into significant inefficiency on standard computing platforms such as CPUs and GPUs. This inefficiency arises from the vast amounts of data these models need to consider, leading to high resource consumption that often outweighs the benefits, especially in resource-constrained environments.

SpAtten’s Approach to Enhancing Efficiency

So, how does SpAtten propose to surmount these challenges? The paper introduces a series of algorithmic optimizations and a dedicated hardware architecture designed specifically to minimize the computational burden and memory footprint of attention mechanisms. These innovations are critical in transforming the theoretical benefits of attention mechanisms into practical, scalable solutions.

- Cascade Token and Head Pruning: This method involves selectively ignoring less relevant data, akin to skimming through text to extract the most crucial information. By focusing only on the most pertinent parts of the input, SpAtten significantly cuts down on unnecessary computations.

- Progressive Quantization: Similar to compressing an image to reduce file size without drastically affecting its quality, this technique simplifies the data processed by the model. This simplification allows for a more efficient computation by reducing the detail level when possible, without substantially impacting the model’s performance.

- Custom Hardware Architecture: To ensure that these algorithmic advancements are fully leveraged, SpAtten introduces a specialized hardware architecture optimized for executing its innovative algorithms. This synergy between hardware and software is pivotal in enhancing the efficiency and reducing the energy consumption of attention-based NLP models.

By addressing the inefficiencies at the heart of attention mechanisms, SpAtten not only reaffirms the value of these models but also paves the way for their broader application. It challenges the status quo, offering a glimpse into a future where advanced NLP technologies can be more widely adopted thanks to improved sustainability and practicality.

Background and Motivation

NLP tasks can be broadly categorized into two types: discriminative and generative tasks. Discriminative tasks require models to summarize input information and make predictions, such as in token-level classification, sentence-level classification, and regression. On the other hand, generative tasks involve both summarizing input information and generating new tokens, with language modeling and machine translation being prime examples.

In this context, BERT stands out for discriminative tasks, focusing solely on the summarization stage. Conversely, GPT-2 excels in generative tasks, incorporating both summarization and generation stages. The distinction between these stages is crucial. In summarization, input tokens are transformed into vectors and processed through a series of blocks, leveraging the attention mechanism to produce intermediate features. This process is batch-processed in GPUs, making summarization relatively faster. However, the generation stage in models like GPT-2 processes a single token at a time, significantly extending runtime due to the iterative nature of generating new tokens and the cumulative data handling involved.

Motivation: Addressing Inefficiencies

The inefficiency of attention inference on general-purpose platforms such as CPUs and GPUs becomes a critical concern. The authors provide a detailed analysis, highlighting that attention mechanisms, despite constituting only about 10% of the overall FLOPs, account for over 50% of the latency in model execution. This disproportion is largely due to the extensive data movements and complex memory operations required by the attention mechanism, which are not efficiently handled by CPUs and GPUs. These platforms are optimized for matrix multiplications but struggle with the attention mechanism’s intricate memory operations, leading to significant slowdowns.

In response to these inefficiencies, the paper proposes SpAtten, a novel approach aimed at enhancing the computational efficiency of attention mechanisms. By focusing on algorithmic optimizations such as cascade token pruning, head pruning, and progressive quantization, SpAtten aims to reduce unnecessary computation and memory access. The motivation is clear: by addressing the bottlenecks in attention inference, the authors seek to pave the way for more efficient NLP models that can run effectively on a wider range of hardware platforms, ultimately making advanced NLP technologies more accessible and practical for real-world applications.

The SpAtten paper introduces an efficient algorithm-architecture co-design that significantly enhances the performance and efficiency of attention mechanisms in NLP models. This advancement is achieved through key contributions in both algorithmic optimizations and hardware architecture, aimed at reducing computation and memory access while maintaining accuracy. Let’s dive into these contributions:

Key Contributions of SpAtten

Algorithmic Optimizations

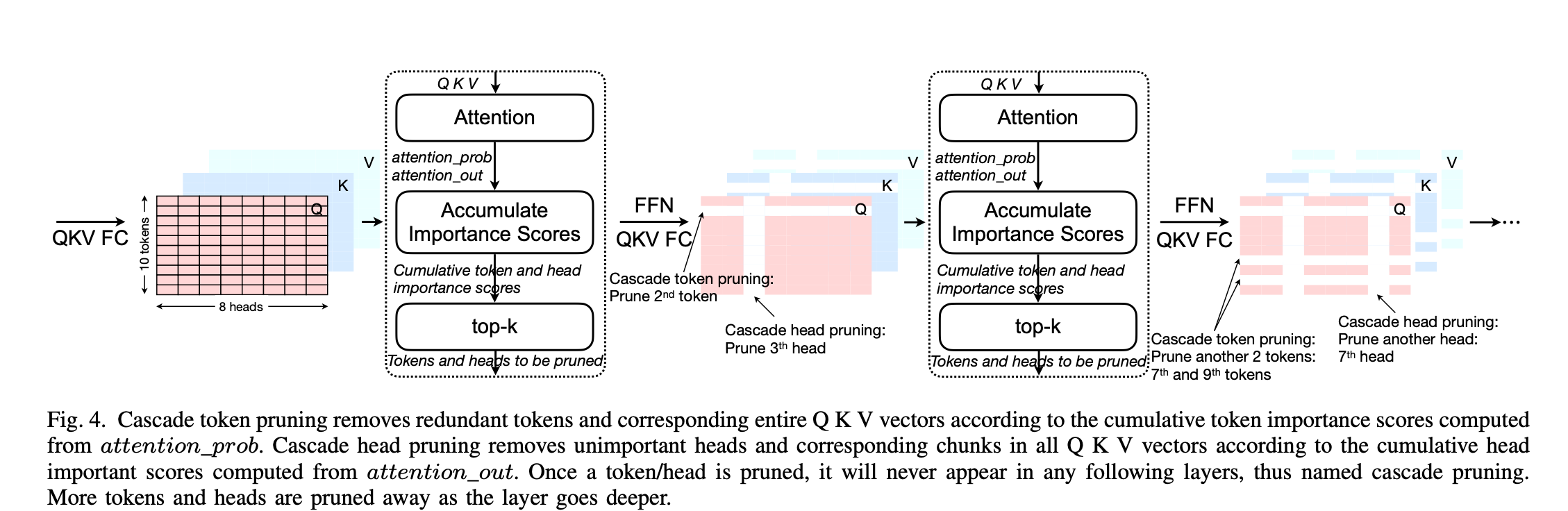

- Cascade Token and Head Pruning: SpAtten employs a novel approach to dynamically prune tokens and heads that are deemed less important for the final computation. This pruning happens in a cascade manner across the layers of the model, meaning that once a token or head is pruned, it is not considered in subsequent layers. This method significantly reduces the number of operations and memory accesses needed.

- Progressive Quantization: Recognizing that different parts of the input data contribute differently to the output, SpAtten applies a progressive quantization strategy. This approach adjusts the bit-width of the data based on its importance, allowing for more aggressive quantization (and thus reduced memory footprint) without sacrificing accuracy. Initially, only the most significant bits are considered, and additional bits are fetched only if necessary, based on a predefined threshold of attention probabilities.

- Local Value Pruning: In addition to token and head pruning, SpAtten introduces local value pruning, which selectively eliminates values based on their contribution to the output. This further optimizes the memory usage and computational requirements of the attention mechanism.

Hardware Architecture

To efficiently implement these algorithmic optimizations, SpAtten proposes a custom hardware architecture that includes:

- Top-k Engine: A high-throughput engine designed to quickly sort token and head importance scores, essential for the cascade pruning process. This engine ensures that the pruning decisions can be made rapidly, without becoming a bottleneck in the system.

- Specialized Memory Hierarchy and Fully-pipelined Datapath: The hardware design features a specialized memory hierarchy to accommodate the sparsity induced by pruning and a fully-pipelined datapath to maximize throughput. These design choices are critical for translating the theoretical savings from the algorithmic optimizations into real-world speedup and energy efficiency.

- Support for Progressive Quantization: The architecture is specifically tailored to support the progressive quantization process, with mechanisms to efficiently fetch and process only the necessary bits of data, thereby reducing memory bandwidth requirements.

Impact and Efficiency Gains

The co-design of these algorithmic optimizations and hardware architecture enables SpAtten to achieve significant reductions in DRAM access and computational requirements, leading to faster inference times and lower energy consumption for attention-based NLP models. The paper’s experiments demonstrate the effectiveness of SpAtten, showcasing substantial improvements over traditional methods and existing accelerators in terms of speedup, model size reduction, and energy efficiency.

By addressing the inefficiencies of attention mechanisms on general-purpose computing platforms, SpAtten opens the door to deploying advanced NLP models on a wider range of devices, including those with limited computational resources. This makes it a pivotal contribution to the field of NLP, promising to enhance the accessibility and applicability of state-of-the-art language models in real-world applications.

Algorithmic Optimizations

The algorithmic optimizations section of the SpAtten paper outlines several innovative techniques designed to enhance the efficiency of attention mechanisms in NLP models. Here’s a structured overview of these optimizations:

Cascade Token Pruning

Cascade Token Pruning is a dynamic process aimed at reducing the computational load by identifying and removing tokens (words or subwords) that contribute minimally to the output of the attention mechanism. This pruning is based on the tokens’ attention probabilities, which reflect their importance. The method involves accumulating these probabilities across layers to determine each token’s cumulative importance score. Tokens with lower scores are deemed less important and are pruned away, resulting in a reduced sequence length for subsequent layers. This approach not only decreases the number of computations but also lessens memory access requirements.

Cascade Head Pruning

Similar to token pruning, Cascade Head Pruning targets the multi-head attention mechanism integral to models like Transformers. In these models, multiple “heads” independently process the input to capture different aspects of the data. However, not all heads contribute equally to the final output. By evaluating the output magnitude of each head, this optimization identifies and prunes the less critical heads. This pruning further streamlines the model by focusing computational resources on the most informative aspects of the input data, enhancing efficiency without compromising model performance.

Local Value Pruning

Building on the concept of pruning less relevant information, Local Value Pruning operates on the “Value” vectors in the attention mechanism. After the attention probabilities are computed, this technique prunes away Vectors (Values) that are least likely to be selected based on the computed attention scores. This step is another measure to cut down on unnecessary computations and memory usage, ensuring that only the most pertinent information is processed and stored.

Progressive Quantization

Addressing the precision of the data being processed, Progressive Quantization is a method to dynamically adjust the bit-width of numerical representations based on their necessity for the computation. The insight here is that not all data require high precision to maintain model accuracy. By initially processing data with a lower bit-width and only increasing precision when needed (based on predefined thresholds of attention probabilities), this technique effectively reduces memory bandwidth and storage requirements. It’s a strategic trade-off between computational complexity and memory efficiency, allowing for more agile data processing.

Hardware Architecture

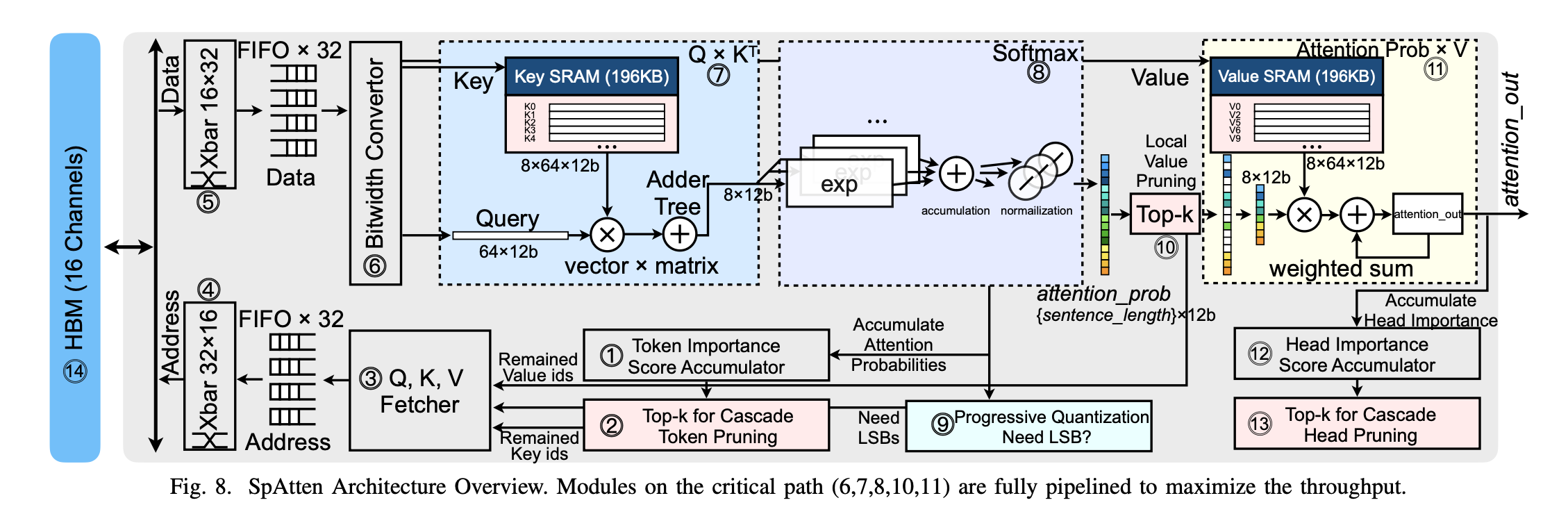

The hardware architecture of SpAtten is meticulously designed to support the algorithmic optimizations discussed earlier, aiming at enhancing the efficiency of attention mechanisms in NLP models. Let’s explore the sophisticated components and strategies that form the backbone of SpAtten’s hardware design:

- Top-K Engine: A novel component designed to rank token and head importance efficiently. This engine is pivotal for the cascade token and head pruning process, determining which tokens and heads are essential for further processing. By reducing computation and memory traffic, the top-k engine addresses the random access challenge, employing a crossbar to manage address processing and enhance bandwidth utilization.

- Bitwidth Converter: To facilitate progressive quantization, an on-chip bitwidth converter adjusts the fetched bits’ size, ensuring compatibility between DRAM data and on-chip processing requirements. This converter plays a crucial role in managing data precision and is essential for maintaining the balance between computational efficiency and model accuracy.

- Query-Key Multiplication Module: This module, equipped with a reconfigurable adder tree, is responsible for the matrix-vector multiplication between keys (K) and queries (Q), a fundamental operation in attention mechanisms. Its design allows for the processing of multiple attention scores simultaneously, optimizing the use of available computational resources.

- Softmax and Progressive Quantization Modules: After the query-key multiplication, attention scores are normalized and processed through these modules to determine the final attention probabilities. The progressive quantization module, in particular, decides whether additional bits (LSBs) need to be fetched based on the computed probabilities, trading off computation for reduced memory access.

Data Fetcher and Crossbar

The architecture includes a sophisticated data fetcher that handles multiple random read requests across all HBM (High Bandwidth Memory) channels, ensuring efficient data retrieval for Q-K-V (Query-Key-Value) operations. A 32-to-16 crossbar facilitates this process, routing read requests to the appropriate channels and optimizing memory access patterns.

Efficiency and Parallelism

SpAtten’s design emphasizes efficiency and parallelism, with the top-k engine supporting high-throughput selection of important tokens and heads. The architecture is fully pipelined, ensuring that every component, from the data fetcher to the bitwidth converter and the query-key multiplication module, operates at maximum efficiency. This pipelining is crucial for translating SpAtten’s algorithmic optimizations into tangible speedup and energy savings in real-world applications.

Impact on NLP Model Performance

By addressing the inefficiencies of traditional attention mechanisms and optimizing both the computational and memory access patterns, SpAtten’s hardware architecture significantly enhances the performance of NLP models. It enables faster inference times and reduced energy consumption, making it feasible to deploy advanced language models on a broader range of devices, including those with limited computational resources.

In summary, SpAtten’s hardware architecture is a testament to the power of algorithm-architecture co-design. It showcases how tailored hardware components and strategic optimizations can overcome the challenges of implementing advanced NLP models, paving the way for more efficient and accessible language processing technologies.

Evaluation Methodology

SpAtten’s performance was rigorously evaluated using SpinalHDL for simulation and Ramulator for HBM modeling. The evaluation compared SpAtten against a variety of hardware platforms such as NVIDIA TITAN Xp GPU, NVIDIA Jetson Nano, Intel Xeon E5-2640 v4 CPU, and ARM A53 CPU on a Raspberry Pi-4, as well as against state-of-the-art accelerators like A3 and MNNFast. The benchmarks spanned across discriminative and generative models, including BERT-Base, BERT-Large, GPT-2-Small, and GPT-2-Medium, covering a total of 30 tasks. The methodology focused on measuring latency, power, and the effectiveness of SpAtten’s pruning and quantization techniques in reducing computational workload and memory access.

Experimental Results

Throughput, Power, and Area

SpAtten demonstrated significant improvements in pruning effectiveness, computation reduction, and DRAM access reduction across both BERT and GPT-2 models. Specifically, it achieved a 1.61 TFLOPS on BERT models and 0.43 TFLOPS on GPT-2 models, highlighting its efficiency in handling both computation-bound and memory-bound tasks. SpAtten’s power consumption was measured at 8.30W, and the architecture occupied an area of 18.71mm^2, indicating a compact and energy-efficient design. Notably, the Q×K and Attention Prob×V modules were identified as the most computationally intensive, benefiting the most from local V pruning and other optimizations.

Comparison with CPUs and GPUs

SpAtten outperformed the NVIDIA TITAN Xp GPU, Xeon CPU, Nano GPU, and Raspberry Pi ARM CPU by significant margins in terms of speedup and energy efficiency. The results highlighted the advantage of SpAtten’s parallelized and pipelined datapath, alongside the substantial impact of its algorithmic optimizations such as cascade pruning and progressive quantization on reducing computation and DRAM access. These optimizations contributed to SpAtten’s superior performance, particularly in the context of advanced NLP tasks.

Comparison with Other Accelerators

Comparing SpAtten with A3 and MNNFast revealed that while A3 and MNNFast explored sparsity, they did not achieve the same level of DRAM access reduction or computational efficiency. SpAtten’s global and cascade token pruning, in conjunction with head pruning and progressive quantization, allowed it to accelerate both attention and feed-forward network (FFN) computations effectively. These features, along with SpAtten’s support for a broader range of models beyond BERT, underscored its comprehensive approach to accelerating attention mechanisms.

The evaluation results section confirms SpAtten’s effectiveness in enhancing the performance and efficiency of attention-based NLP models. Through a combination of innovative algorithmic optimizations and a custom hardware architecture, SpAtten significantly reduces computation and memory requirements, setting a new standard for NLP accelerators. The detailed performance analysis, comparing SpAtten with existing CPUs, GPUs, and specialized accelerators, demonstrates its potential to facilitate the broader adoption and application of advanced NLP models across a range of devices and platforms.