Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity

At the heart of today’s AI research, scale emerges as a monumental frontier. Observations have illuminated a simple yet profound truth: uncomplicated architectures, when backed by substantial computational resources, expansive datasets, and a vast number of parameters, consistently outshine their more complex counterparts. This naturally leads us to ponder a critical question: how can we train larger-scale models without sacrificing computational efficiency? The paper in discussion introduces an intriguing solution—leveraging sparsity and hard routing to significantly expand a model’s parameters while maintaining constant FLOPs per forward pass. The concept of a Mixture-of-Experts, first unveiled in a pioneering 2017 Google Brain study, marked a departure from conventional wisdom. Traditionally, machine learning models apply the same parameters across all inputs. In contrast, the Mixture of Experts paradigm selects distinct parameters for each input, challenging the status quo. This innovative approach has since been woven into the fabric of nearly every leading-edge model today, including Mistral (Mistral 8x7B) and GPT-4, signaling its transformative impact on the field.

Introduction and background

This paper introduces a major leap in the ability to scale up model parameters without a corresponding increase in computational demand. It explores the potential of using extremely large models within the limitations of existing hardware by introducing the “Switch Transformer” concept. This approach addresses the significant computational costs usually associated with scaling up transformer-based models by incorporating sparsity and a mechanism called Mixture-of-Experts (MoE). Unlike traditional methods that use the same parameters for all inputs, MoE models choose specific parameters for each input, a novel approach that has been refined in this work.

The study goes beyond theory to show how it’s now feasible to train models with trillions of parameters, overcoming previous hurdles such as computational inefficiency, complexity, and training instability. It simplifies the MoE routing algorithm, reducing both communication and computational overhead, and introduces training techniques that stabilize the training of these large models in lower precision formats.

Key Contributions of the Paper:

- Switch Transformer Architecture: Enhancing the MoE model for more efficient scaling.

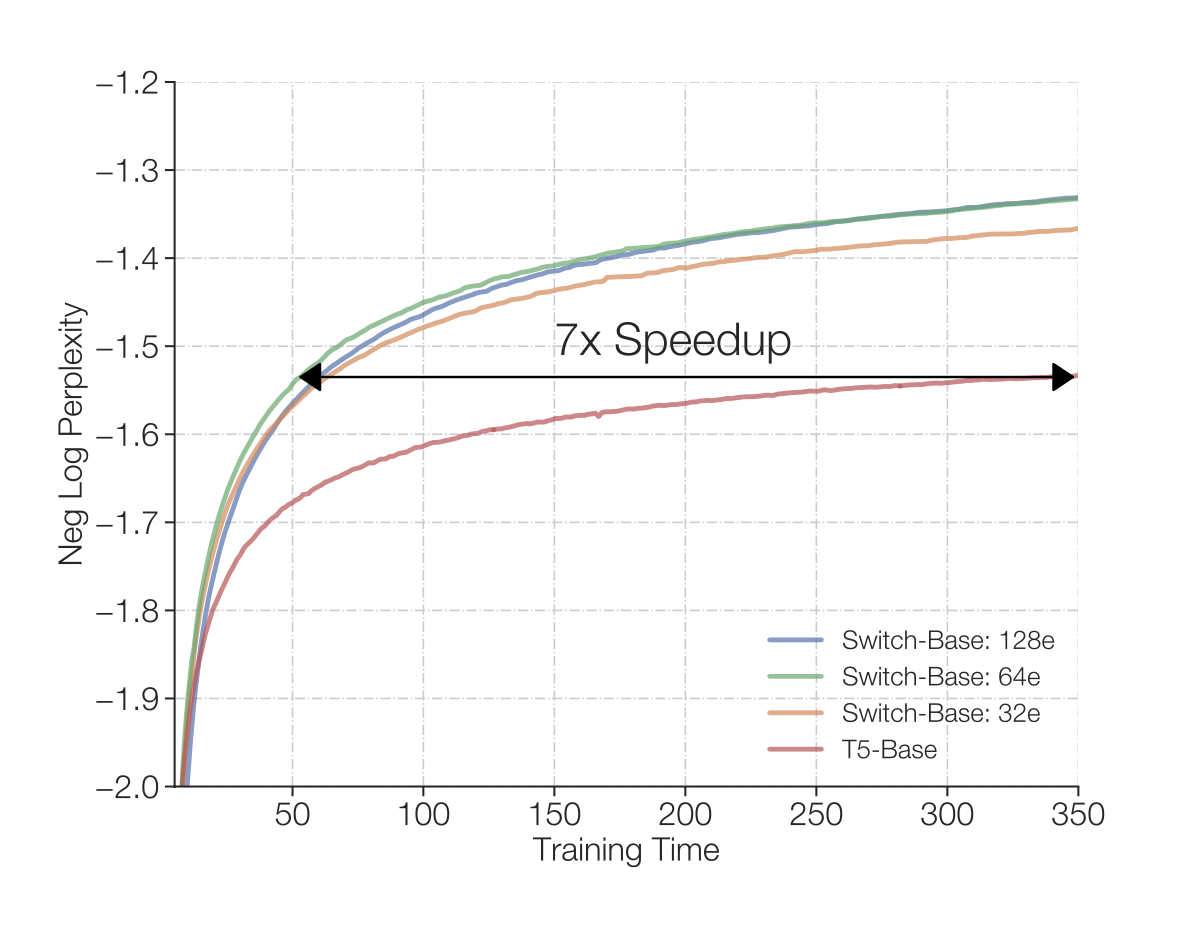

- Performance Benchmarks: Demonstrating up to 7x faster pre-training speeds on the same computational budget compared to the T5 model.

- Model Distillation: Successfully condensing large, sparse models into smaller, dense models without significantly losing performance.

- Training Techniques: Introducing improved pre-training and fine-tuning methods, including selective precision training and a new initialization scheme for more experts.

- Multilingual Improvements: Showing universal improvements across 101 languages, proving the model’s effectiveness in various linguistic contexts.

- Scaling to a Trillion Parameters: Training models up to a trillion parameters, achieving a 4x speedup in pre-training efficiency compared to the T5-XXL model.

These contributions highlight the paper’s importance in pushing forward the scalability and efficiency of AI models, offering practical insights and methodologies for training larger models more effectively.

The Switch Transformer

Core Concept of Switch Transformers

Switch Transformers introduce a method to scale model parameters significantly while managing computational demands efficiently. The core concept is sparse routing, where inputs are directed through specific pathways or “experts” based on their characteristics, instead of engaging the entire model for every input. This approach reduces computational load and allows for larger model scales without linear increases in computation.

The efficiency of sparse routing is achieved through a dynamic algorithm that assigns inputs to the most relevant experts. The model integrates this with a set of specialized experts and a mechanism for combining their outputs, ensuring effective use of computational resources.

Training these large models involves novel techniques to maintain stability and efficiency, including specific learning rate adjustments, regularization methods tailored for sparse architectures, and adaptive training strategies. These elements collectively enable the Switch Transformer to learn effectively from vast datasets while maintaining a manageable computational footprint, offering a practical solution for developing and training large-scale AI models.

Architecture

The Switch Transformer architecture innovatively scales up the parameter count of Transformer models in a computationally efficient manner. This approach was inspired by the exhaustive study of the benefits of scale, revealing power-law scaling with model size, dataset size, and computational budget. Central to the Switch Transformer’s design is the goal of increasing parameter count while keeping floating point operations (FLOPs) per example constant, thereby addressing a separate and crucial scaling axis.

A key element in this architecture is the Mixture-of-Expert (MoE) routing mechanism, simplified for efficiency. The MoE layer takes a token representation (x) and routes it to the top-k determined experts out of a set ${E_i(x)}_{i=1}^{N}$ of $N$ experts. The routing is determined by the router variable $W_r$ producing logits $h(x) = W_r \cdot x$, which are normalized via a softmax distribution over the $N$ experts. The probability of routing to expert (i) is given by the equation:

$p_i(x) = \frac{e^{h(x)i}}{\sum{j=1}^{N}e^{h(x)_j}}$

This formula ensures that the input is directed to the most relevant expert, based on the routing logic’s output. The output yy from the mixture-of-experts (MoE) layer is computed as a weighted sum of the outputs from all the experts, where the weights are determined by the routing probabilities. This is crucial for integrating the expert contributions back into the model’s workflow. The equation for computing the output $y$ is as follows:

$y=∑p_i(x)E_i(x)$

Switch Transformers introduce a significant shift by simplifying this routing to select only a single expert per token, which reduces routing computation and enhances performance. This singular routing strategy, or “Switch” layer, not only simplifies the model’s architecture but also significantly reduces the computational load.

Furthermore, the architecture’s efficiency is bolstered by distributed training setups where the model’s sparsely activated layers distribute unique weights across different devices. This distribution allows the model’s weight to increase with the number of devices without overwhelming the memory and computational resources of each device.

In essence, the Switch Transformer architecture employs a blend of simplified sparse routing, efficient use of hardware, and distributed training strategies to achieve scalability and computational efficiency. This framework supports the training of models with an unprecedented number of parameters, paving the way for advancements in large-scale machine learning applications.

Data, Model, and Expert Parallelizm

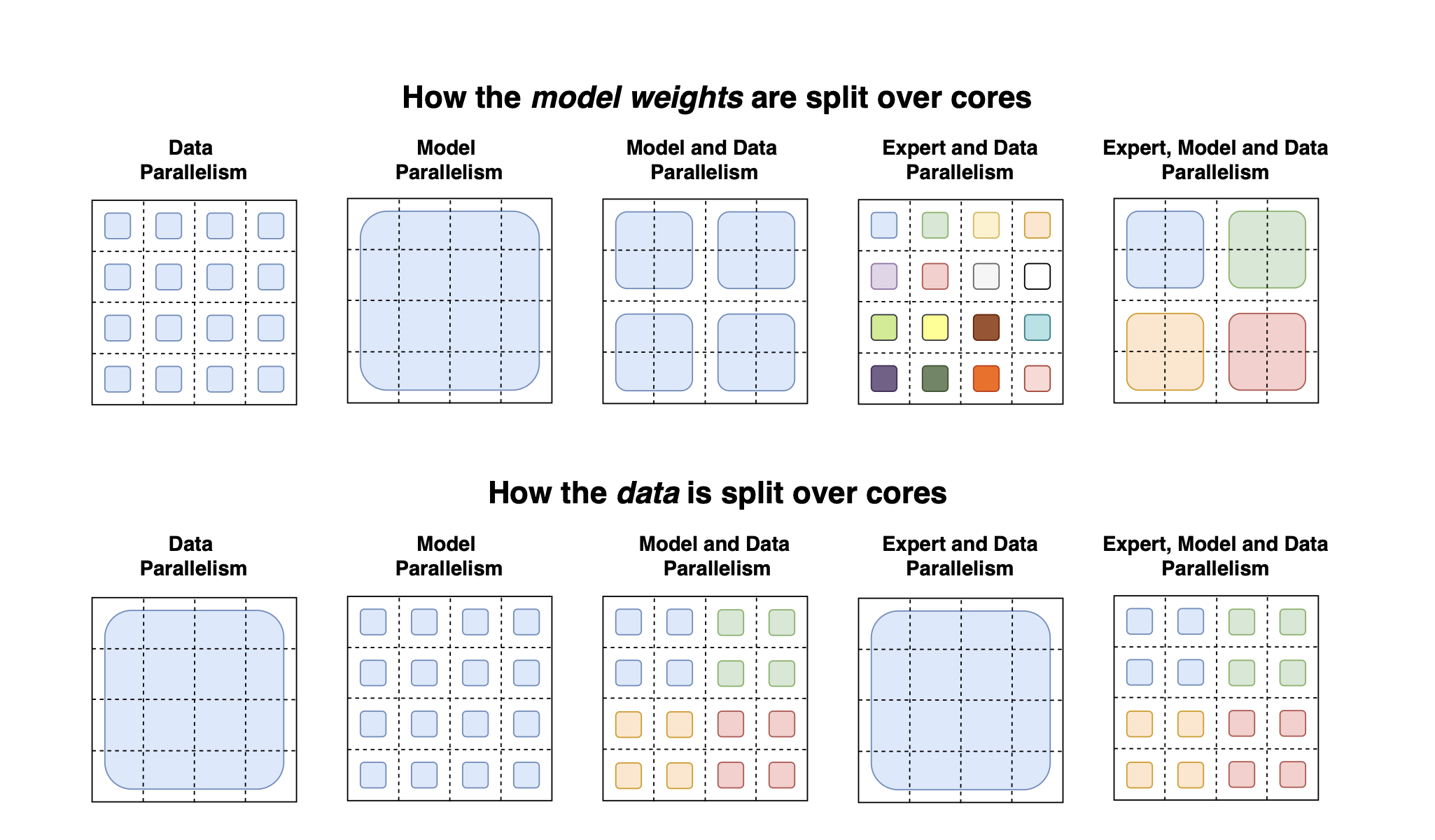

The authors propose a sophisticated strategy for scaling Transformer models by harnessing parallel computing across data, models, and experts. This approach addresses the challenge of enhancing model capacity without hitting the performance bottleneck commonly associated with linear parameter scaling. Rather than merely expanding the model size, the authors advocate for a multifaceted scaling strategy that incorporates parallelism to optimize computational resources.

In their exploration, the authors identify that simply adding more experts to the model linearly increases the parameter count but can lead to diminishing performance returns. To circumvent the limitations imposed by hardware memory when scaling models traditionally, they introduce a blend of data, model, and expert-parallelism. This blend involves distributing the model’s workload across multiple processing units to manage its expanding complexity and size efficiently.

Data parallelism is achieved by replicating the model across all cores, with each core processing a different subset of the data, effectively leveraging multiple cores to train on large datasets simultaneously. Model parallelism, on the other hand, involves splitting the model’s parameters across different cores, necessitating communication between cores to synchronize the distributed parts of the model. Expert-parallelism is particularly tailored to the Mixture of Experts (MoE) layer, allocating different experts to different cores, thereby scaling the model’s capacity by increasing the number of experts without overburdening each core with additional computational demands.

To illustrate the application of these parallelism strategies, the authors discuss the partitioning of the Transformer’s Feed-Forward Network (FFN) layer across cores, employing Mesh TensorFlow for the practical implementation of this partitioning. This method allows for the efficient scaling of models to sizes previously unattainable, by making full use of the hardware’s capacity, balancing computational load, and minimizing the necessity for communication between cores.

By integrating data, model, and expert-parallelism, the authors navigate the complexities of scaling Transformer models, demonstrating how to effectively increase model size and capacity. This approach enables the training of models with trillions of parameters, significantly advancing the capabilities of natural language processing and broader AI applications.

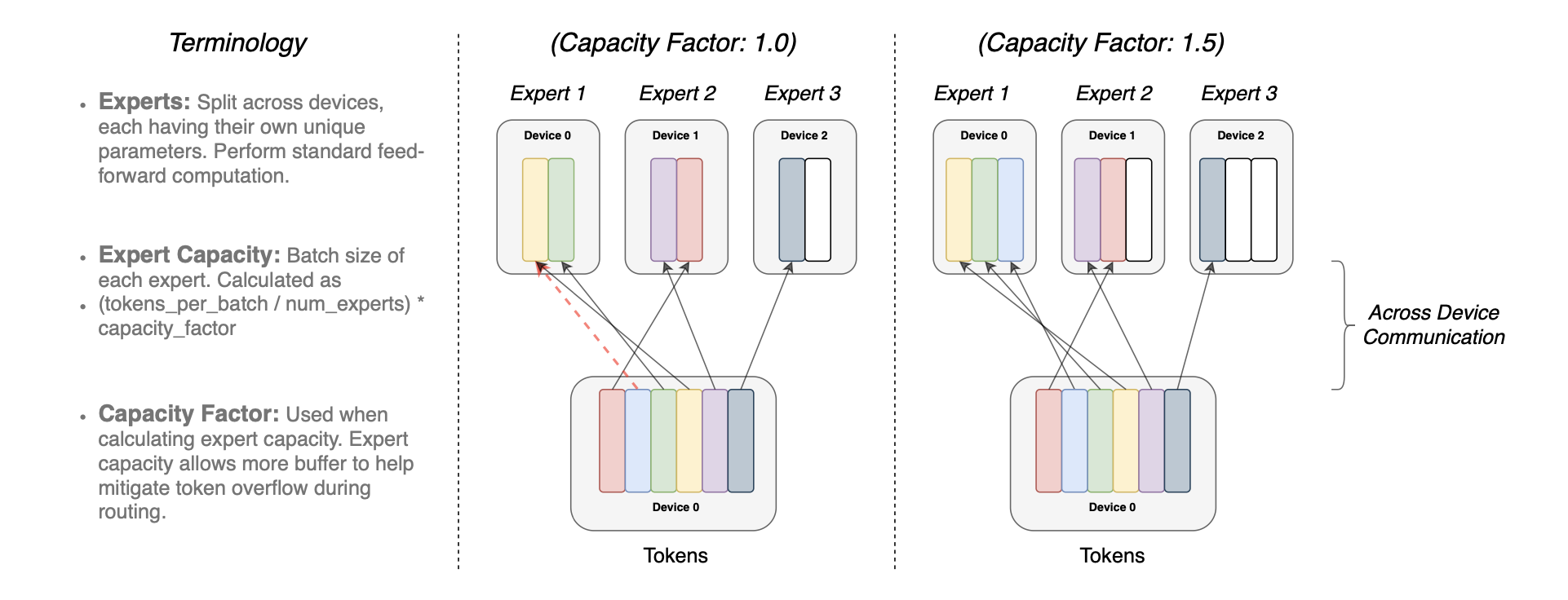

The authors revisit the concept of Mixture-of-Experts (MoE) in the context of Switch Routing, challenging the previously held notion that routing to more than one expert is necessary for effective learning and non-trivial gradients. Contrary to earlier studies that suggested the importance of routing to multiple experts for model training and gradient flow, the authors propose a simplified strategy that routes each token to only a single expert. This approach, referred to as a Switch layer, is shown to preserve model quality while reducing the complexity of routing computation and improving overall performance.

The benefits of employing a single expert routing strategy include a reduction in router computation since each token is directed to only one expert, thereby halving the batch size (or expert capacity) needed for each expert. This simplification not only reduces the computational burden but also simplifies the routing implementation and diminishes communication costs. The concept of a capacity factor plays a crucial role here, as it allows for the adjustment of expert capacity to manage token overflow effectively. A higher capacity factor can mitigate overflow issues but at the cost of increased computation and communication.

Furthermore, the authors address the differentiation of the router, emphasizing that the gate value, $p_i(x)$, in their formulation allows for the differentiation of routing decisions. This aspect is crucial for training the model, as it ensures that the routing mechanism itself can learn and adapt based on the training data, thus optimizing the allocation of tokens to experts over time.

In essence, Switch Routing as proposed by the authors represents a significant rethinking of the MoE paradigm. It simplifies the complexity associated with expert routing by focusing on a single-expert routing mechanism, thereby enhancing efficiency and model performance. This approach underscores the authors’ broader objective of scaling Transformer models efficiently, by introducing sparsity and parallelism without compromising on model quality or increasing computational demands disproportionately.

The formula provided by the authors for calculating expert capacity is as follows:

$expert\ capacity=\frac{(tokens\ per\ batch)}{(number\ of\ experts)}×capacity\ factor$

Results

Scaling Properties

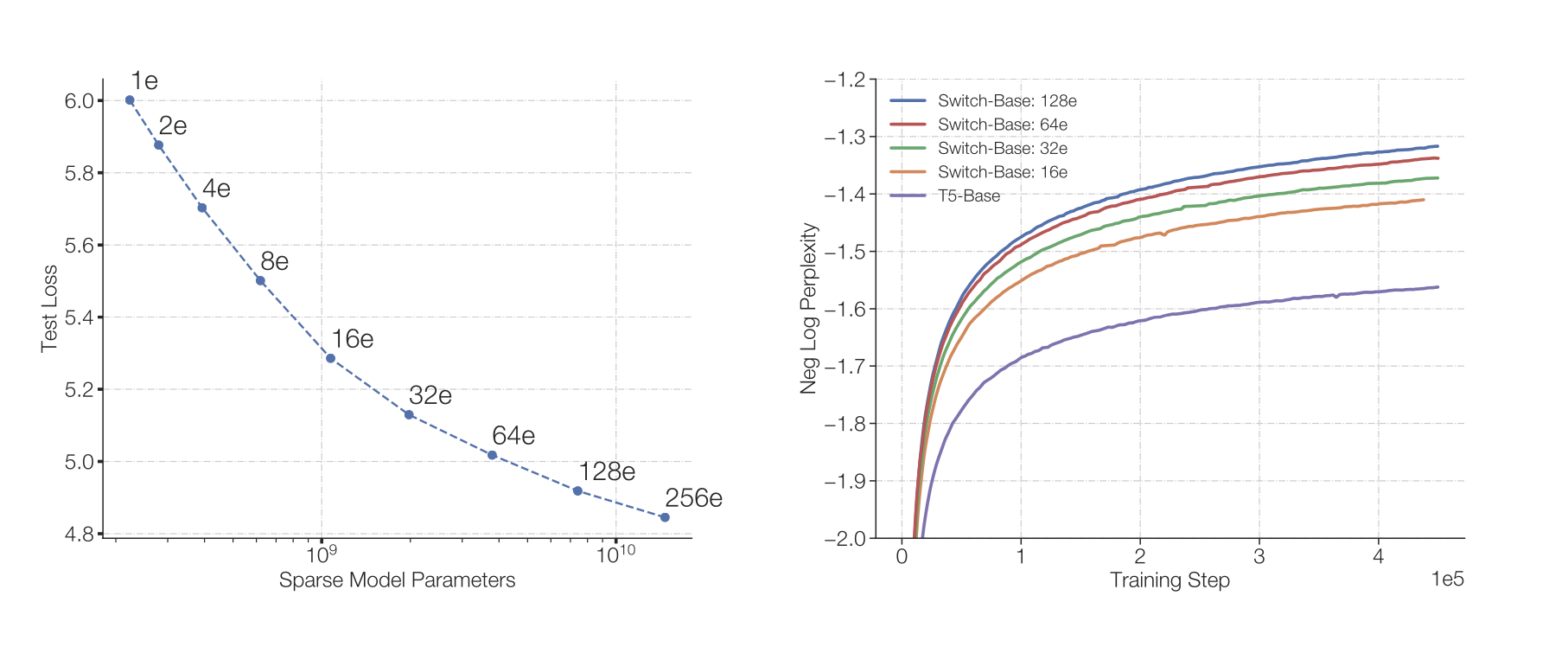

In the section on scaling properties, the authors delve into the empirical analysis of how the Switch Transformer models scale with respect to various factors, including the number of parameters, computational efficiency, and model performance. The discussion is anchored around a series of experiments designed to understand the relationship between model size, computational resources, and performance outcomes.

The authors present findings that illustrate the benefits of scaling up the number of parameters in a model. Through their experiments, they demonstrate that larger models, when trained with an adequate computational budget and dataset size, consistently outperform smaller models. This relationship is quantified in a series of graphs and figures, which detail the performance improvements across different model sizes and configurations.

One key aspect highlighted is the efficiency gains achieved by the Switch Transformer through its sparse routing and expert parallelism. The authors show how these architectural innovations allow for the linear scaling of parameters with a sublinear increase in computational cost. This is a significant departure from traditional dense models, where parameter scaling typically results in a proportional increase in computational requirements.

Figures included in the section provide a visual representation of these scaling properties, showcasing the performance gains as models scale up in size. These visuals help illustrate the diminishing returns on model performance as the number of parameters increases, highlighting the importance of balancing model size with computational efficiency.

Furthermore, the authors explore the concept of “capacity factor” within the context of scaling, demonstrating how adjusting this factor influences model performance and resource utilization. By fine-tuning the capacity factor, they show how models can be optimized to achieve better performance without unnecessarily increasing computational costs.

The scaling properties section concludes with insights into the optimal strategies for scaling up Transformer models, offering guidance on how to leverage the Switch Transformer’s architectural features for efficient and effective model scaling. The detailed explanation of these properties, supported by empirical data and visual aids, provides a comprehensive understanding of the factors influencing the performance and efficiency of large-scale Transformer models.

Fig. 4 Scaling Effects in Switch Transformers: The left plot tracks perplexity improvement with increasing parameters by doubling the number of experts from 2 up to 256, contrasting a T5-Base model (223M parameters) with a 14.7B parameter model. Despite equal computational budgets, more experts consistently enhance performance. The right plot compares negative log perplexity across expert counts, highlighting the Switch-Base models’ superior sample efficiency against a dense baseline (purple line).

Fig. 5 Speed Gains with Switch Transformers: Trained on 32 TPUv3 cores with consistent FLOPs per example, Switch Transformers outpace dense Transformer models in efficiency. The 64-expert Switch-Base model reaches T5-Base quality seven times faster, showcasing continued performance enhancements with fixed computational resources.

Downstream Tasks

The authors validate the effectiveness of Switch Transformers across a variety of natural language processing tasks, illustrating how the architectural advancements translate into improved performance in practical applications.

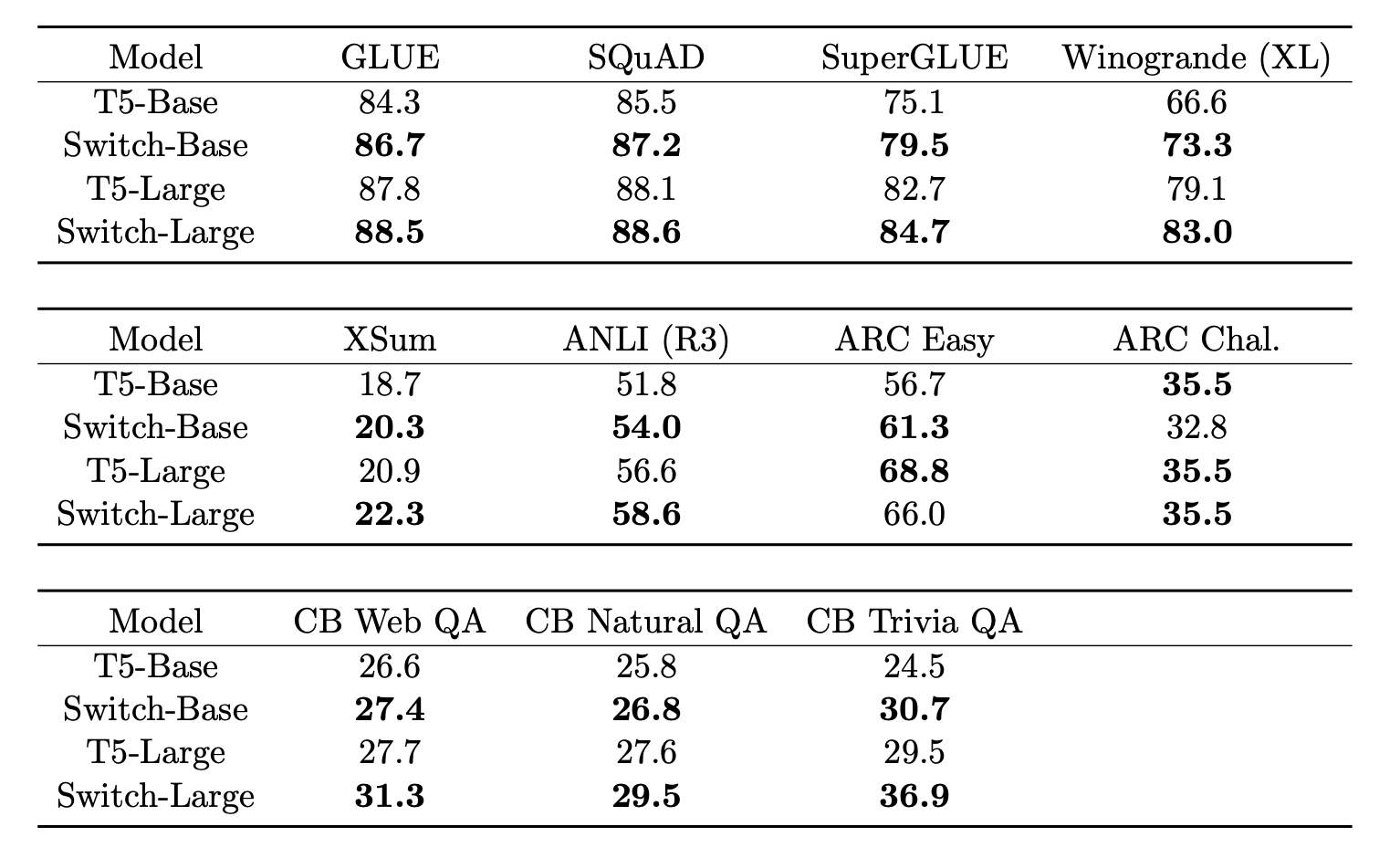

Fine-Tuning on Diverse NLP Tasks: The authors begin by fine-tuning the Switch Transformer models on a wide array of NLP tasks, including question answering, summarization, and tasks that require deep language understanding and reasoning. They leverage well-established benchmarks like GLUE, SuperGLUE, SQuAD, and others to assess the models’ capabilities. The tasks cover a broad spectrum, from sentiment analysis and sentence similarity to natural language inference and more complex reasoning challenges.

Performance Improvements: The fine-tuning results are impressive, with Switch Transformers showing significant improvements over the baseline models. For instance, in SuperGLUE, a benchmark that aggregates multiple challenging tasks, Switch Transformer variants outperform the T5-Base and T5-Large models by notable margins. This trend is consistent across other tasks as well, where the Switch models achieve superior performance, underscoring their effectiveness in leveraging the large-scale parameterization for enhanced language understanding.

Distillation Results: Recognizing the practical challenges of deploying massively parameterized models, the authors also explore model distillation as a means to compress the knowledge of large Switch Transformers into more manageable, dense models. The distillation techniques discussed show promising results, enabling the preservation of a significant portion of the performance gains while drastically reducing the model size. This makes it feasible to deploy the advanced capabilities of Switch Transformers in environments with stringent resource constraints.

Multilingual Learning: Further extending their evaluation, the authors demonstrate the multilingual capabilities of Switch Transformers by training on a dataset covering 101 languages. The results highlight the model’s proficiency in handling diverse linguistic inputs, showing substantial improvements across all languages when compared to baseline models. This reinforces the versatility of Switch Transformers, making them suitable for a wide range of applications beyond English-centric tasks.

In summary, the author demonstration of the Switch Transformer’s superior performance across a diverse set of NLP challenges. It showcases the model’s ability to not only excel in language understanding and reasoning tasks but also its adaptability to multilingual contexts and the feasibility of its deployment through effective distillation strategies.

Fig. 6 Fine-tuning results

Miscellaneous and Conclusion

Throughout the paper, the authors engage in various discussions that shed light on the nuanced aspects of the Switch Transformer model and its implications for the broader field of machine learning and natural language processing:

-

One key discussion revolves around the exploration-exploitation dilemma in routing decisions. The authors compare different strategies for expert selection, including deterministic selection and sampling, highlighting the trade-offs between exploration and exploiting known good paths. This exploration is crucial for balancing the load across experts and ensuring that the model remains versatile and adaptable to different inputs.

-

Another important discussion is on the model’s effectiveness across different compute regimes. The authors demonstrate that the Switch Transformer architecture scales gracefully, not only in high-resource environments with thousands of cores and trillions of parameters but also in more constrained settings. They show that even small-scale implementations of the model with just a few experts can yield significant performance improvements over traditional dense models, making the approach versatile and applicable in a wide range of scenarios.

-

The relationship between pre-training performance and downstream task results is also examined. The authors present data showing that improvements in pre-training lead to better performance on downstream tasks, emphasizing the importance of effective pre-training strategies. However, they also note that the translation of pre-training improvements to downstream success is not always straightforward, suggesting areas for future research.

In conclusion, the paper underscores the transformative potential of Switch Transformers in pushing the boundaries of model scaling and computational efficiency. By innovatively applying sparse routing and expert parallelism, the authors present a compelling case for rethinking traditional approaches to model architecture and training. The discussions within the paper highlight the model’s flexibility across different scales and its ability to achieve state-of-the-art performance on a range of tasks, setting the stage for future advancements in the field.